What smart contract analytics means for legal teams

Here “smart” doesn’t mean blockchain code; it means smarter analytics over contract documents. The goal is to turn contract language into metrics, trends, and briefs your teams can actually use: clause coverage, exception rates, renewal exposure, obligations by owner and due date, and variance from your playbook. Dashboards give leaders the picture; briefs and tickets move work forward.

Data model: clauses, entities, and events

Analytics rests on a clean data model. Clauses are typed and versioned, entities capture parties/dates/amounts, and events track renewals, notices, audits, deliveries, and approvals. Once those three are reliable, filters and cohorts (by product, region, segment, or counterparty) start to sing.
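A minimal sketch of that three-part model in Python, assuming one record per contract with its clauses, entities, and events attached. The class names, fields, and the `latest_clause` helper are hypothetical illustrations, not a prescribed schema.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


# Hypothetical schema: names and fields are illustrative, not a standard.
@dataclass(frozen=True)
class Clause:
    contract_id: str
    clause_type: str   # e.g. "liability_cap", "audit_rights"
    version: int       # incremented when an amendment changes the text
    text: str


@dataclass(frozen=True)
class Entity:
    contract_id: str
    kind: str          # "party", "date", or "amount"
    value: str


@dataclass(frozen=True)
class Event:
    contract_id: str
    kind: str          # "renewal", "notice", "audit", "delivery", "approval"
    due: date
    owner: Optional[str] = None


@dataclass
class Contract:
    contract_id: str
    region: str
    product: str
    clauses: list[Clause] = field(default_factory=list)
    entities: list[Entity] = field(default_factory=list)
    events: list[Event] = field(default_factory=list)

    def latest_clause(self, clause_type: str) -> Optional[Clause]:
        """Return the highest-version clause of the given type, or None."""
        matches = [c for c in self.clauses if c.clause_type == clause_type]
        return max(matches, key=lambda c: c.version, default=None)
```

With records shaped like this, a cohort is just a filter, for example `[c for c in contracts if c.region == "EMEA"]`.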

KPIs that matter (and ones to skip)

- Exception rate by clause and business line.

- Renewal exposure in the next 30/60/90 days.

- Clause coverage vs. policy (e.g., audit, SLA credits).

- Time‑to‑close (and where it stalls).

- Variance from playbook by template vs. third‑party paper.

Skip vanity charts. If a metric doesn’t change a decision, it’s noise.
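Two of these KPIs reduce to simple aggregations. The sketch below assumes review results arrive as `(clause_type, is_exception)` pairs and renewals as `(renewal_date, annual_value)` pairs; both input shapes and the function names are illustrative assumptions.

```python
from collections import defaultdict
from datetime import date


def exception_rate_by_clause(reviews):
    """reviews: iterable of (clause_type, is_exception) pairs.
    Returns {clause_type: fraction of reviewed clauses flagged as exceptions}."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for clause_type, is_exception in reviews:
        totals[clause_type] += 1
        if is_exception:
            flagged[clause_type] += 1
    return {ct: flagged[ct] / totals[ct] for ct in totals}


def renewal_exposure(renewals, today: date, windows=(30, 60, 90)):
    """renewals: iterable of (renewal_date, annual_value) pairs.
    Returns the total value renewing within each window, counted cumulatively
    (the 60-day figure includes the 30-day one). Past dates are ignored."""
    exposure = {w: 0.0 for w in windows}
    for renewal_date, value in renewals:
        days_out = (renewal_date - today).days
        for w in windows:
            if 0 <= days_out <= w:
                exposure[w] += value
    return exposure
```

To cut exception rates by business line as well, pass a `(business_line, clause_type)` tuple as the key; any hashable key works.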

From clause detection to portfolio trends

Clause-level analysis is useful, but the bigger payoff comes from portfolio-level views. For example: “Which customers have liability caps below 12 months’ fees?” or “Where are we missing IP infringement carve‑outs?” Cohort comparisons also make negotiations easier: claims about what is “market” are easier to test when you can show the actual distribution.
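Once caps, fees, and carve-outs are extracted, both example questions become filters. This sketch assumes hypothetical fields `annual_fees`, `liability_cap`, and `has_ip_carve_out`; expressing each cap in months of fees is one way to get a distribution you can chart per cohort.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContractTerms:
    # Hypothetical extracted fields; adapt to your own data model.
    customer: str
    annual_fees: float
    liability_cap: Optional[float]   # None when no cap was found
    has_ip_carve_out: bool


def cap_in_months(contracts):
    """Liability cap expressed as months of fees, per customer (skips missing data)."""
    return {
        c.customer: c.liability_cap / (c.annual_fees / 12)
        for c in contracts
        if c.liability_cap is not None and c.annual_fees > 0
    }


def caps_below(contracts, months=12):
    """Customers whose cap is below `months` months of fees."""
    return sorted(name for name, m in cap_in_months(contracts).items() if m < months)


def missing_ip_carve_outs(contracts):
    """Customers with no IP infringement carve-out extracted."""
    return sorted(c.customer for c in contracts if not c.has_ip_carve_out)
```

The values from `cap_in_months` can feed a histogram per region or segment, which is what makes a “market” claim testable.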

Renewals and obligations tracking that actually prevents surprises

Analytics should feed a tracker where owners and due dates are obvious. Anything with a clock (renewals, audit windows, termination notice periods) belongs in a queue with reminders. Connect ticketing so owners receive tasks with context and a link to the clause that created each one.
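The core of such a queue is computing when notice is actually due, not just when the contract renews. A minimal sketch, assuming a hypothetical `Obligation` record with a notice period in days and a fixed reminder lead time:

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Obligation:
    # Hypothetical tracker record; field names are illustrative.
    contract_id: str
    kind: str          # e.g. "renewal", "audit_window", "termination_notice"
    event_date: date   # e.g. the auto-renewal date
    notice_days: int   # notice period required before event_date
    owner: str
    clause_ref: str    # link back to the clause that created the obligation


def notice_deadline(ob: Obligation) -> date:
    """Last day on which notice can still be given."""
    return ob.event_date - timedelta(days=ob.notice_days)


def due_reminders(obligations, today: date, lead_days: int = 14):
    """Obligations whose notice deadline falls between today and
    today + lead_days, most urgent first."""
    horizon = today + timedelta(days=lead_days)
    due = [ob for ob in obligations if today <= notice_deadline(ob) <= horizon]
    return sorted(due, key=notice_deadline)
```

Each returned record already carries `owner` and `clause_ref`, which is what a ticketing integration would need to open a task with context.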

Variance analysis: how far are we from preferred language?

Use similarity search to compare live clauses to your library. Show an acceptability label, a diff, and a short suggestion. Track variance by region or product line to spot systemic issues (e.g., one template consistently ships without a data‑security carve‑out).
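The text calls for similarity search against a clause library; this sketch swaps in Python's standard-library `difflib` character matching as a simple stand-in for embedding-based search. The library entries, function names, and the thresholds in `acceptability` are illustrative assumptions, not calibrated values.

```python
import difflib


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1], case-insensitive."""
    return difflib.SequenceMatcher(None, a.lower(), b.lower()).ratio()


def closest_preferred(clause_text: str, library: dict) -> tuple:
    """Return (library_key, score) for the most similar preferred clause."""
    return max(((key, similarity(clause_text, text)) for key, text in library.items()),
               key=lambda pair: pair[1])


def acceptability(score: float, accept: float = 0.9, review: float = 0.7) -> str:
    """Map a score to a label; the thresholds are illustrative, not calibrated."""
    if score >= accept:
        return "acceptable"
    if score >= review:
        return "needs review"
    return "non-standard"


def word_diff(preferred: str, live: str) -> list:
    """Word-level unified diff from preferred to live language."""
    return list(difflib.unified_diff(preferred.split(), live.split(),
                                     fromfile="preferred", tofile="live", lineterm=""))
```

In a production setup, `similarity` is where an embedding model would plug in; the labeling and diff steps stay the same, and averaging scores per region or template surfaces the systemic gaps described above.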

Dashboards leaders care about

Leaders want trend lines and risk exposure, not wall‑to‑wall detail. Give them three boards: Renewals (90‑day pipeline with risk notes), Exceptions (top five by frequency/impact), and Coverage (policy compliance by template/region). Everything else should be drill‑down.

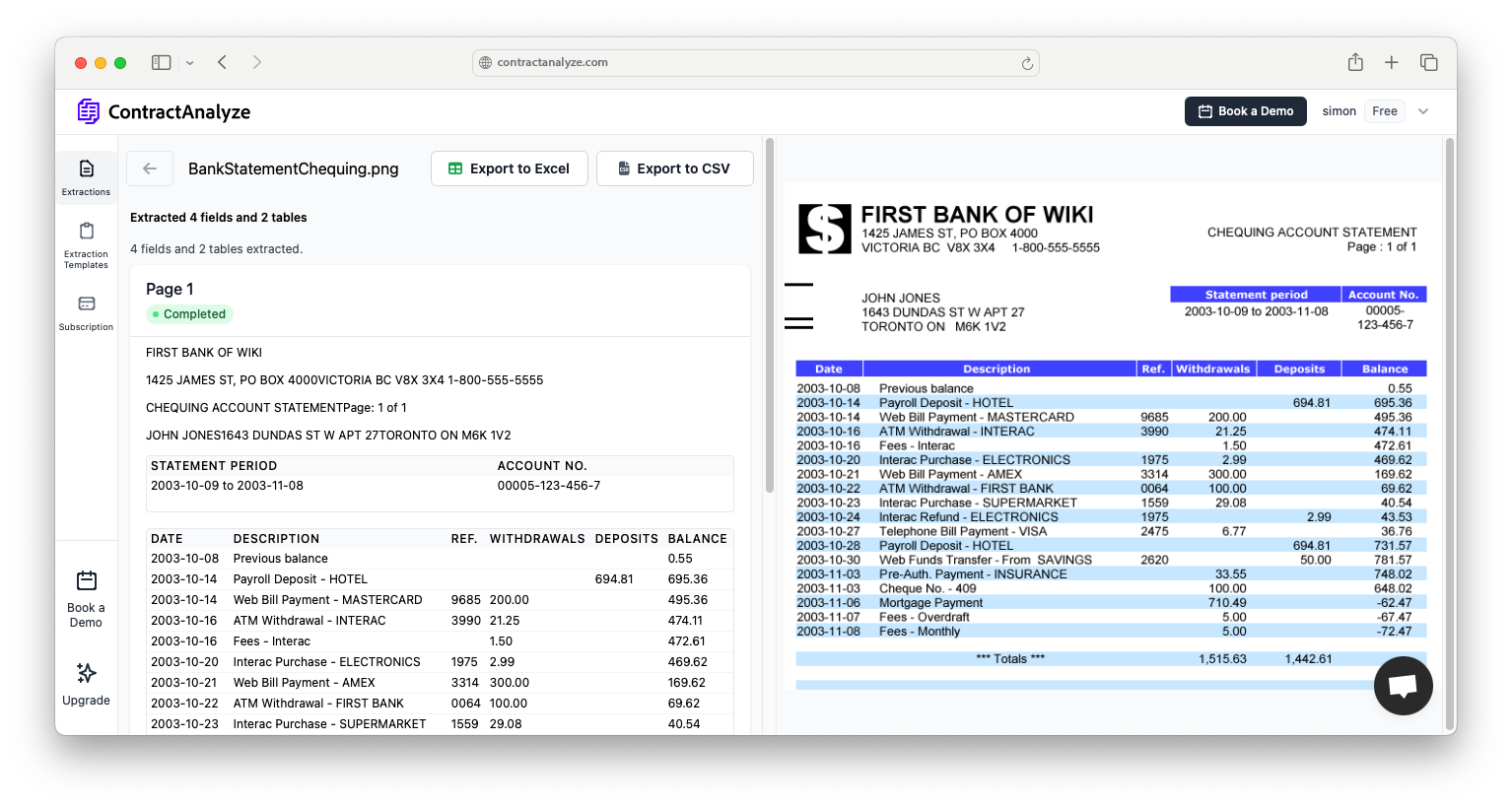
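The Exceptions board needs a ranking rule. One simple option, assumed here rather than taken from the text, is frequency multiplied by an impact weight that legal assigns per clause type; `top_exceptions` and the weights are hypothetical.

```python
from collections import Counter


def top_exceptions(exception_log, impact, n=5):
    """exception_log: one clause-type entry per exception raised.
    impact: {clause_type: weight}, a hypothetical severity score (default 1.0).
    Ranks clause types by frequency x impact and returns the top n."""
    counts = Counter(exception_log)
    scored = [(ct, count * impact.get(ct, 1.0)) for ct, count in counts.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:n]
```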

Getting data out of PDFs (and keeping it trusted)

Scans happen. Put OCR and cleanup front and center, and measure extraction accuracy separately for scanned and digital PDFs. Keep a “view source text” link everywhere so people can check the sentence behind each metric. Traceability is how analytics earns trust.
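Traceability works when every extracted value keeps a pointer to its source text. A minimal sketch, assuming hypothetical `SourceSpan` and `Extraction` records where character offsets index into each page's extracted text and a human spot-check sets `correct`:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceSpan:
    # Hypothetical pointer behind a "view source text" link.
    doc_id: str
    page: int
    start: int   # character offsets into that page's extracted text
    end: int


@dataclass(frozen=True)
class Extraction:
    field_name: str
    value: str
    source: SourceSpan
    scanned: bool   # True if the page text came from OCR
    correct: bool   # outcome of a human spot-check


def source_text(page_text: str, span: SourceSpan) -> str:
    """The exact sentence or phrase a metric was derived from."""
    return page_text[span.start:span.end]


def accuracy_by_source(extractions):
    """Spot-check accuracy reported separately for scanned and digital PDFs."""
    hits, totals = defaultdict(int), defaultdict(int)
    for e in extractions:
        key = "scanned" if e.scanned else "digital"
        totals[key] += 1
        hits[key] += int(e.correct)
    return {k: hits[k] / totals[k] for k in totals}
```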

Security, privacy, and retention

Use encryption in transit/at rest, role‑based access, audit logs, regional data residency, and retention controls (including zero‑retention modes). If you handle personal data, document redaction paths and how PII minimization works.
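A redaction path can start as small as a pass that masks obvious identifiers before text leaves the secure store. This is a crude sketch: the regular expressions only cover common email shapes and US-style phone numbers, and the function name and placeholders are hypothetical. Real PII minimization needs much broader detection.

```python
import re

# Illustrative patterns only: common email shapes and US-style phone numbers.
# Real PII minimization needs broader detection (names, addresses, IDs).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"(?:\+\d{1,3}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}")


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace emails and phone numbers with placeholders and return the
    redacted text plus per-type counts (useful for an audit log)."""
    counts: dict[str, int] = {}
    text, counts["email"] = EMAIL.subn("[EMAIL]", text)
    text, counts["phone"] = PHONE.subn("[PHONE]", text)
    return text, counts
```

Returning counts alongside the text lets the audit log record that redaction ran without storing what was removed.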

Rollout: a 30‑60‑90 plan

- Days 0–30: Stand up extraction for two document types and define KPIs (exceptions, renewals).

- Days 31–60: Wire up dashboards, connect ticketing, and run weekly owner reviews.

- Days 61–90: Expand cohorts, publish accuracy and SLA targets, and start quarterly reviews with business stakeholders.

Common wins (and how to measure them)

Teams typically report fewer surprise renewals, faster approvals (because exceptions are known earlier), and clearer negotiation stances backed by data. Measure before/after on missed renewals, average time‑to‑close, and exception rates for 3–5 key clauses.
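The before/after comparison is simple arithmetic once a baseline is captured before rollout. A small sketch follows; the function name and metric names are placeholders for whatever your tracker records.

```python
def percent_change(before: dict, after: dict) -> dict:
    """Percent change per metric, rounded to one decimal place.
    Negative values mean the metric went down (the goal for missed
    renewals, time-to-close, and exception rates). Metrics missing from
    `after`, or with a zero baseline, are skipped."""
    return {
        name: round(100.0 * (after[name] - base) / base, 1)
        for name, base in before.items()
        if name in after and base != 0
    }
```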

FAQs

Can we customize dashboards? Yes, by product, region, owner, or counterparty.

What about scans? They’re supported; track their accuracy separately.

Will this replace counsel? No. It gives counsel cleaner signals and fewer fire drills.